I vividly remember one of my freshman-year history professors saying there were a handful of texts missing from the written record that he would sacrifice “a finger or two” to read. I was taken aback, but when I thought about it, I realized I felt a similar yearning towards literature–specifically, the entirety of it. A finger might not be the worst price for being able to absorb every book ever written all at once, like Keanu Reeves learning Kung Fu in The Matrix.

That was fourteen years ago. While I would no longer consider the dismemberment deal, I’m still continually frustrated with how little it’s possible to read in a lifetime. Early last year, after I used ChatGPT for the first time, my most acute hope was that the large language model could become my personal reading guide, saving me hours of searching, scrolling, and shelf-scanning by providing me with tailored recommendations. Unfortunately, my initial attempts to solicit these from GPT-3.5 and early GPT-4 were disappointing, yielding far too many fabricated article titles and joyless vehicles of search engine optimization. Nowadays, though, simple prompts to ChatGPT return recommendations good enough that I find myself asking for them every week or so.

For example, a few months ago I wrote a two-sentence prompt asking ChatGPT for thought-provoking, evergreen, mid-length reads on technology. The resulting list included Tom Wolfe’s fantastic Esquire profile of “Mayor of Silicon Valley” Robert Noyce (1983), a rollicking Wired feature in which a “hacker tourist” traverses fiberoptic cables across three continents (1996), and the classic Asimov short story “The Last Question” (1956). Meanwhile, the (necessarily blunter) search-engine versions of this query, “best journalism about technology,” “evergreen long reads about technology,” get me to a much blander slate of options–mostly publications’ “top technology stories” lists and SEO-driven aggregations with titles like “best tech journalists of 2023.”

Occasionally asking ChatGPT for article recommendations, however, is different from using it as a personal reading guide. That habit requires a significant investment of time, given the care it would take to craft detailed prompts about my learning goals, keep them updated, and follow the responses’ advice.

Closer interaction with the chatbot would also mean spending more time sifting through its missteps. LLMs do not understand the meaning of text like humans do; they predict the next word in a given sequence, using statistical probabilities gleaned from the materials they have ingested. This often produces accurate output, but at other times it produces inaccuracies or nonsense, referred to as “hallucinations.” There would also be poor critical reading abilities to contend with; OpenAI benchmark data revealed last year that GPT-4 got a failing score of 2 (out of 5) on the AP Literature and AP Language and Composition exams, compared to the 4 or 5 it received on all 13 other AP exams it took.
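To make the idea of next-word prediction concrete, here is a deliberately tiny sketch. It is an illustration under strong assumptions, not how ChatGPT works internally: real LLMs use neural networks over sub-word tokens, while this toy only counts which word follows which in a made-up corpus. The names `train_bigrams` and `predict_next` are invented for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # cat ("cat" follows "the" twice)
print(predict_next(model, "dog"))  # None (the toy model never saw "dog")
```

The toy has no notion of truth, only of frequency: it will happily continue any word it has seen with whatever followed it most often, which is one rough way to picture where confident nonsense comes from.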

At the same time, I can’t stop imagining the model’s training data as a Matrix-like network of literary near-omniscience: hundreds of thousands of books, millions of web pages. How could I–a person who feels mildly self-congratulatory these days when I get through a novel in two weeks–not believe I could benefit from such a “well-read” machine’s insight?

A few weeks ago, I decided to explore what it would be like to use ChatGPT as a reading and learning guide. I considered doing this by spending several months immersed in the chatbot’s guidance, writing detailed prompts to elicit reading plans and recommendations for a subject I’m currently learning about. However, this approach would have major drawbacks. If I used a combination of ChatGPT and other tools in the process, it would be difficult to know what benefits could be fairly credited to the former. If I decided to use only ChatGPT’s recommendations, though, I would be creating conditions too unrealistic to be fair; not even LLMs’ most fervent acolytes tout them as a replacement for everything. More importantly, neither situation would have a control–a way to compare my experience following ChatGPT’s recommendations with the experience of the version of me who would have searched for recommendations without it.

I believe a more instructive way to explore ChatGPT’s value as a reading guide is through a retrospective hypothetical. Through this method, I choose a subject I’ve already studied in the pre-LLM world and imagine, in detail, how I might have used ChatGPT to intervene in my learning back then. While neither this nor the immersion method is scientifically rigorous, I’m drawn to the retrospective hypothetical because it offers something more like a control: my memory of what I was like at an earlier stage of my educational journey, and of how my learning progressed without access to an LLM chatbot.

I let my present mood of nostalgia decide the test subject for me: English literature, my college major. How might I have used ChatGPT as a reading guide, if it had been released in 2010, when I was a college freshman, instead of 2022? Would it have been a useful learning tool, smoothing the curation process and adding valuable insights? Or would the shortcuts it provided have, in the long run, undercut my literary education?

The start of college was overwhelming. There was rarely enough time to gulp down all the assigned reading, never enough time to digest it, and almost always indecision about whether I had chosen the wrong meal. Faced with this type of indecision in a post-LLM world, I believe I would have created something like TS Eliot - Literary Influence Mapping GPT (see full prompt here, on my “Training the Replacement” GitHub repository).

TS Eliot GPT is a custom GPT–a personalized version of OpenAI’s model that anyone can create by describing the type of responses they want the model to return. (This process is not too different from writing a long prompt and reusing it. If you prefer to use another LLM, just copy and paste the full prompt into the chatbot of your choice.)

TS Eliot GPT first asks the user to specify a “central text”–any language-based work of art, such as a novel, play, poem, movie, short story, etc. Once the user provides this, the custom GPT responds in the following format (a paraphrased version of the full prompt):

Introduction: Discusses the central text and its key themes. (~40 words)

Forerunners: Chronological list of 3-4 texts published before the central text, and that reputed literary critics consider artistic predecessors of the central text. Descriptions (~10 words each) of each Forerunner.

Successors: Chronological list of 3-4 texts published after the central text, and that reputed literary critics consider artistically indebted to the central text. Descriptions (~10 words each) of each Successor.

Content Analysis: (broken into 4 paragraphs)

Overview: High-level summary of how literary tradition has been influenced by the central text. (3 sentences)

Forerunners Analysis: Discussion of how literary interpretations of forerunner texts changed because of the central text. (5-6 sentences)

Successors Analysis: Discussion of how the central text influenced the successors, and how critics regarded it differently over time as the successors were published. (5-6 sentences)

Influence Level: Designation of whether the central text occupies a minor, moderate or major place in the literary tradition, and why. (4 sentences)
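For readers who would rather reuse this format in another chatbot, the outline above can be assembled into a single instruction string. The sketch below is a hypothetical reconstruction: the section names and length targets come from the paraphrase above, while the wording of each task, the `SECTIONS` list, and the `build_instructions` helper are assumptions made for illustration and are not the actual prompt in the repository.

```python
# Hypothetical reconstruction of the response format paraphrased above.
# Each entry is (section name, task, length target).
SECTIONS = [
    ("Introduction", "Discuss the central text and its key themes.", "~40 words"),
    ("Forerunners", "List 3-4 earlier texts, in chronological order, that "
     "reputed literary critics consider artistic predecessors.",
     "~10 words per description"),
    ("Successors", "List 3-4 later texts, in chronological order, that "
     "reputed literary critics consider artistically indebted.",
     "~10 words per description"),
    ("Overview", "Summarize how the central text influenced literary "
     "tradition.", "3 sentences"),
    ("Forerunners Analysis", "Discuss how readings of the forerunners changed "
     "because of the central text.", "5-6 sentences"),
    ("Successors Analysis", "Discuss how the central text influenced the "
     "successors and how critics' views of it shifted.", "5-6 sentences"),
    ("Influence Level", "Label the central text minor, moderate or major, "
     "and explain why.", "4 sentences"),
]


def build_instructions(sections):
    """Join the section specs into one reusable instruction string."""
    lines = ["Ask the user for a central text, then respond in this format:"]
    for name, task, length in sections:
        lines.append(f"{name}: {task} ({length})")
    return "\n".join(lines)


prompt = build_instructions(SECTIONS)
print(prompt)
```

Keeping the sections in a list rather than one long string makes it easy to tweak a single length target or drop a section while iterating on the prompt.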

I named this GPT after TS Eliot, the famous modernist poet and critic, because, when I was an overwhelmed freshman, Eliot’s famous essay, “Tradition and the Individual Talent,” became my cognitive scaffolding for imagining relationships between artistic works. The essay describes a vast, complex lattice of Western literary tradition in which works sit next to one another as part of an “ideal order”–a system of “conformity between the old and the new.” Each new literary work that joins the tradition alters that order, causing each existing work to readjust its position in the larger group to accommodate the newcomer. In the line that stuck like a burr in my mind fourteen years ago, Eliot describes the past as being “altered by the present as much as the past directs the present.”

Eliot’s words helped me make sense of works’ resonance with one another. I read the Iliad (published 8th c. BC) early in the fall of freshman year, then the Aeneid (published ca. 19 BC) a couple of months later. Reading the Iliad first helped me understand the Aeneid better when I read it, but the reverse was also true–the more I learned about Virgil’s epic, the more I appreciated the enduring subtleties of Homer’s. I got through Don Quixote after a transformative slog, and, a few months later, Borges’ “Pierre Menard, Author of the Quixote” insinuated itself into my mind as a permanent companion to Cervantes’ masterpiece. It took me a while to get into Paradise Lost, but when I did, I was dumbfounded by how current it felt, and how much anti-hero characters across literature, film and television owed to Milton’s Satan.

A stained-glass window became the image that came to mind when I thought of Eliot’s order; each new addition took the form of a new piece of glass slotting into place–influencing, however minorly, the larger design, and subtly changing the apparent color of the panes to which it sat closest. Speed continued to feel like an issue, though. The stained-glass window in my head was constructing itself, but at what felt like a punishingly slow rate.

Frustration with this slowness, I believe, would have made consulting ChatGPT for a faster solution even more enticing. So would the resonance between how I imagined TS Eliot’s vast literary order and the vastness of LLM training datasets, which have often been visualized in similar network-like ways. The “Artificial Worldviews” website is a particularly beautiful example of this–a glowing map of subject-matter connections based on 1,764 queries to GPT-3.5. I thought of Artificial Worldviews as I created TS Eliot GPT, imagining myself using it as a college student, entering one central text after another to build my mental map of GPT-4’s literary knowledge.

I’ve been playing with TS Eliot GPT for several weeks now, and I can say, with confidence, that I would have avidly used it in college. What it lacks in depth or accuracy it makes up for in usability. The closest analogue I can think of to the experience of using TS Eliot GPT is probably surfing Wikipedia links, but that would be more cumbersome–a much less efficient way to gain familiarity with the names of works and authors in the Western canon.

Here’s the response TS Eliot GPT gives for Jane Austen’s Persuasion:

Introduction:

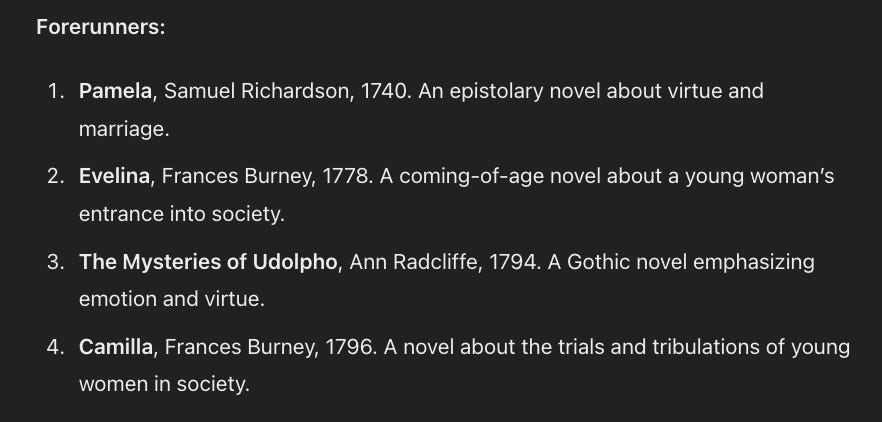

Forerunners:

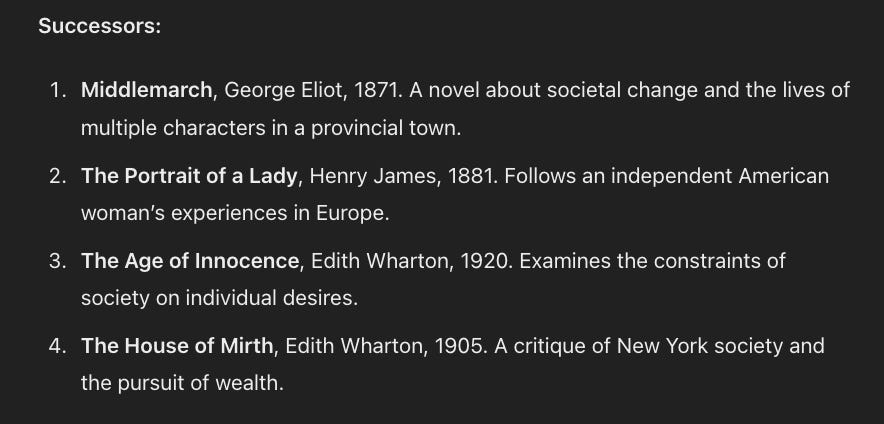

Successors:

Content Analysis (broken into 4 paragraphs):

Overview:

Forerunners Analysis:

Successors Analysis:

Influence Level:

All in all, a pretty decent showing considering the constraints at play–word count and LLMs’ limited abilities for literary analysis. The initial overview is accurate and concise. The inclusion of all eight forerunners and successors generally makes sense. The content analysis, though in many ways irritatingly vague, still touches on Persuasion’s main themes and its thematic linkages with the forerunner and successor works. And the designation of Persuasion as a major work is also, in my opinion, clearly the right decision–though I’m biased, given that it’s my favorite novel.

Some of the response’s main issues occur in the forerunners list. Three of the four forerunners listed adhere reasonably well to the instructions I wrote for TS Eliot GPT, specifying that forerunner texts must be “considered by reputed literary critics to be artistic forerunners of the central text.” Both Evelina and Camilla are good inclusions; Frances Burney, who wrote both, is frequently cited as one of Austen’s favorite authors. In fact, the strength of this historical and thematic connection is perhaps why TS Eliot GPT cites two of Burney’s works, despite this violating its instructions, which say that the custom GPT should only list one work per author. Pamela is another good inclusion; Samuel Richardson’s influence on Jane Austen is also frequently discussed in scholarly literature, especially in relation to her development of free indirect discourse.

Ann Radcliffe’s The Mysteries of Udolpho, a gothic novel, however, does not fit the criteria specified in TS Eliot GPT’s requirements for forerunners. Radcliffe’s work bears little stylistic or thematic similarity to Persuasion. However, Austen does make many references to Udolpho in Northanger Abbey, her satire of the gothic novel genre. I’d speculate that ChatGPT, armed with reams of training data that often associates Udolpho with Austen, understands that there is a relationship between the two and links them, but has little way of understanding the qualitative difference of that relationship–direct allusion versus artistic influence.

Granted, this is a minor mistake. It would not have bothered me as a college freshman, if I noticed it at all. And it would have certainly not outweighed the many benefits I would have gained from TS Eliot GPT as a tool. For example, getting Samuel Richardson and Frances Burney on my radar as a freshman–I only heard of them as a senior, I believe–would have undoubtedly benefited me. The successors are also all canonical works thematically resonant with Austen’s; it would have helped to know that those names were important, and to learn basic information about their plots.

The output of TS Eliot GPT, obviously, reflects the biases of the Western canon. It would be unfair to find fault with the underlying model for this; the prompt I wrote loudly demands it. Still, I believe that having access to a tool like TS Eliot GPT might have only prolonged the time I spent restricted by the rigidities that drove me to create it.

Through the overuse of TS Eliot GPT, I might have been able to cling for much longer to the illusion that I could button-press my way to a substantive understanding of literature. Having a tool that explicitly marked works as minor, moderate or major could also have led me to make crucial decisions–what courses to take, what works to study further–based on the relative volume of a work’s mentions in a statistical model’s training data. In these ways, overreliance on ChatGPT could have created its own digital rabbit hole, like an algorithmic feed tailored to my own individual biases.

Using TS Eliot GPT in moderation, though, might have supplied me with clarifying insight. After perhaps an hour or two of using it to gain a facility with canonical names, it would have been best to transition to consulting it only occasionally, and always as a springboard for further research.

If the first half of my English degree focused on the absorption of literature, the second half focused on the dissection of it. This is fairly typical; higher-level literature courses assign much more secondary source reading than lower-level courses do, often to introduce students to schools of critical theory–New Historicism, Marxism, Feminism, Postcolonialism, and Deconstructionism, among others. During my junior and senior years, as I read such texts, I came to develop a research interest in the formation of the literary canon–how it, as an institution, dispenses value to certain texts over others.

To explore this interest and make sense of critical theory using ChatGPT, I would likely have created something like Critic Recommender GPT (see full prompt here). Like TS Eliot GPT, this custom GPT would first ask users to specify a central text. Then, instead of listing forerunners and successors, it would choose one critic it deems most relevant in discussions of the central text. This information, plus the further analysis provided in the meat of the response format, would be relayed as follows (again, this is a condensed version of the actual prompt instructions):

Central Text Overview: Introduction of central text title, author name, publication year, and brief discussion of the central theme. (40 words)

Critic Overview: Introduction of the critic that ChatGPT considers most relevant when examining the central text. (80 words)

Textual Analysis: Discussion of the specific aspects of the central text that are especially relevant to the critic; includes a direct quote from the critic, ideally one that brings up the central text. (120 words)

Meta Discussion: Describe the critic’s stance on the literary canon. Be as specific as possible. (80 words)

Critic Recommender GPT asks the model to conduct much more complex analysis than TS Eliot GPT does. Still, both GPTs would have drawn me in with the promise of accelerating my way to the same skill–an intuition of who the “important” voices are in a new subject domain.

Let’s review the analysis Critic Recommender GPT returns when fed Persuasion as a central text:

Central Text Overview:

Critic Overview:

Textual Analysis:

Meta Discussion:

These results, like TS Eliot GPT’s, are fairly helpful. The model’s choice of critic(s) makes sense; The Madwoman in the Attic is a seminal text of feminist criticism, and Gilbert and Gubar frequently discuss Austen and Persuasion in it. The analysis, though superficial, is correct in its main points as well, emphasizing Gilbert and Gubar’s focus on Anne Elliot’s quiet resilience and the canonical marginalization of female authors.

There are two major issues with Critic Recommender GPT, however. The first is hallucination. The only direct quote attributed to The Madwoman in the Attic–“the anxieties of a woman living in a patriarchal culture manifest in her muted resistance to its dictates”–appears nowhere in the book, nor anywhere online.

Hallucinated quotes are a perennial problem with Critic Recommender GPT. For the central text of War and Peace, it recommends Mikhail Bakhtin and attributes this fictitious quote to the critic’s (real) book Problems of Dostoevsky’s Poetics: “In the polyphonic novel, the author’s voice is not the only one to speak; it is joined by the voices of the characters, who are full and equal participants in the novel’s dialogue.” My personal favorite hallucination so far occurred in Critic Recommender GPT’s result for Ulysses, which attributes this hallucinated quote to Harold Bloom: “Joyce's Ulysses is the greatest masterwork of modern narrative, synthesizing myth, history, and the quotidian in a unique textual tapestry.” There’s something funny about the idea of a relentless aesthete like Bloom using a phrase as clunky as “unique textual tapestry.”

Hallucinations like these are par for the course with GPT and other LLMs; many experts believe them to be a fundamental, non-fixable part of the models’ architecture. TS Eliot GPT hallucinates and defies its instructions occasionally as well, but it does so less often, and less egregiously than Critic Recommender GPT; the most common inaccuracies it dispenses are incorrect publication dates and odd-one-out forerunner/successor linkages (like with The Mysteries of Udolpho). TS Eliot GPT’s comparative accuracy is likely due to the fact that its instructions call for less depth and specificity. There are plot summaries and links between literary works all over the internet and literature for the model to draw on; in comparison, pinpointing the work of a specific critic that discusses a specific work requires much more precision. Encountering increasing levels of hallucinations as an upperclassman would be an indication of language models’ diminishing returns as I progressed through college; the more my research interests narrowed, the less I could trust LLMs to provide consistently accurate information on them.

The second issue with the custom GPT would have been less immediately irritating than the hallucinations, but in my opinion, more damaging in the long run. Critic Recommender GPT displays extreme bias towards certain prominent critics. When I elicited its responses to the top 50 ebooks (over the past 100 days) on Project Gutenberg, Critic Recommender recommended Mikhail Bakhtin and Harold Bloom five times each. See this spreadsheet for the full results of this casual experiment.

Bakhtin and Bloom certainly cast long shadows in the world of letters, but, in my opinion, no critic is prominent enough to take up 10% of mentions on their own. Their voices are even more prominently represented when you subtract the eight nonfiction books from the group of 50 ebooks, meaning Bakhtin and Bloom each constitute 12% of all mentions where the central text is literary. Other frequently mentioned voices are feminist critic Elaine Showalter and French philosopher Michel Foucault, who each got three mentions (6% each of total recommendations).
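The percentages above are simple shares, and they can be checked in a few lines. This is a back-of-the-envelope sketch, not a re-analysis of the spreadsheet: the counts are the ones quoted in the text, the `share` helper is a name made up for the example, and the 12% figure assumes that all five of each critic’s mentions were for literary (not nonfiction) central texts.

```python
def share(mentions, total):
    """Percentage of recommendations, rounded to one decimal place."""
    return round(100 * mentions / total, 1)


TOTAL_BOOKS = 50   # top Project Gutenberg ebooks queried
NONFICTION = 8     # nonfiction titles among them
LITERARY = TOTAL_BOOKS - NONFICTION  # 42 literary central texts

# Bakhtin or Bloom: five mentions each.
print(share(5, TOTAL_BOOKS))  # 10.0
# Assumption: all five mentions were for literary central texts.
print(share(5, LITERARY))     # 11.9, i.e. roughly 12%
# Showalter or Foucault: three mentions each.
print(share(3, TOTAL_BOOKS))  # 6.0
```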

Critic Recommender GPT is also heavily biased towards old criticism and scholarship. Of the 33 unique critics the model chose, 25 are deceased. Of the 32 that had their birth years available online, all were born before 1951. This demonstrated preference for seniority runs counter to the general academic understanding that, in order for scholarship to be relevant, it must consider recent work done on the topic. By mostly invoking critics who wrote decades or more than a century ago, ChatGPT promotes a limitingly conservative view of the important voices in literary criticism.

ChatGPT did not invent systemic bias towards certain works; it reflects the human biases present in its training data. Still, the opacity of how LLMs function, combined with the lack of transparency from OpenAI and other foundation-model companies about their training data, presents unique challenges when we attempt to examine the biases their models exhibit. For many years, I expect, scholars will be studying LLM outputs to reverse engineer an imperfect understanding of which works and voices these models value over others.

One such fascinating study is “The Chatbot and the Canon: Poetry Memorization in LLMs.” In it, Lyra D’Souza and David Mimno find that ChatGPT demonstrates inconsistent abilities to regurgitate poems word-for-word. They find that a work’s presence in a Norton Anthology–a modern indicator of a work’s canonical status if there ever was one–is the greatest predictor of whether ChatGPT will recite it accurately. They reflect:

Memorization has legal implications, such as copyright for texts that are not in the public domain. It also has cultural implications: the ability of a model to retrieve one text over another can perpetuate biases around the accessibility of digitized texts. Current literary priorities and aesthetics determine what literature is more prevalent online, and a larger online presence increases the chances of a text making its way into a pretraining dataset and thus being memorized. In this way, LLMs are poised to perpetuate the echoic nature of the literary canon within a new digital context.

Another paper about GPT-4’s literary memory, this time for books, reveals that the texts most frequently memorized by the model are popular copyrighted fantasy and science fiction, as well as public domain works. Comparatively, the authors write, GPT-4 “knows little about works of Global Anglophone texts, works in the Black Book Interactive Project and Black Caucus American Library Association award winners.” This disparity in memorization, they continue, “has the potential to bias the accuracy of downstream analyses.” For example, the LLM was significantly better at predicting the publication year of a work if it had also memorized it.

As an upperclassman, I would have likely noticed the bias in responses to Critic Recommender GPT. Bloom’s overrepresentation would have seemed especially ironic, too; I would have created Critic Recommender GPT to learn more about various types of criticism, only to find that the critic it most recommended was one openly contemptuous of most critical schools, dubbing them the “school of resentment.”

So, after all this, would it have been worth it to use ChatGPT as a recommendation tool as I studied for my college English degree? Overall, my verdict is a tentative yes, especially in the earlier years.

LLM chatbots can provide usefully customized primers of subjects. The mapping of canonical literary texts that TS Eliot GPT provided could have helped me contextualize the onslaught of names I was exposed to freshman year. It would have been important not to put too much stock in this, though; placing an excessive emphasis on acquiring facts can come at the expense of contextual knowledge. In general, it seems prudent to be skeptical of the pursuit of any type of knowledge that an LLM chatbot is much better suited to convey than a human. This is not because general knowledge is unimportant, but because attempting to go toe to toe with a computer in the memorization of it is an impossible task that can also come with subtler analytical limitations.

In college, once I realized how impractical it was to try to learn about all the “important” texts, I was forced to begin constructing my mental stained-glass window more effortfully: reading primary and secondary texts, asking questions in class and at office hours, having discussions with friends, noticing which texts were often juxtaposed in secondary sources. Learning to embrace this slower, more deliberate pace was a critical step towards my understanding of how actual knowledge is gained–to understand that my history instructor’s willingness to sacrifice two of his fingers was born of a grueling, close study of specific texts, not an abstract desire to know “what he should.”

It would have been important to extend this skepticism to ChatGPT’s content on the sentence level, as well. Trying to gauge potential bias levels in responses would be important, as would being vigilant about hallucinations. I would need to be sure to gut-check and fact-check the chatbot’s information before acting on it, and to make sure I wasn’t over-relying on it in lieu of hands-on research.

In the best-case scenario, using ChatGPT might have helped me reflect on the nature of my focus. Writing prompts would have been a useful way to crystallize my learning goals–not something I ever explicitly did in college. In this way, custom GPT prompts would document my “knowledge wish-lists” at pivotal stages in my education. Being able to look back at them after months or years would have been a useful way to reflect on how my thinking had changed since then, and what the underlying assumptions of my thought patterns had been.

More than anything, it would have been important to remember to conserve my time as I used ChatGPT as a learning tool. It would be tempting to let the prompt iteration process go on forever, especially since instruction tweaking does lead to better outputs up to a certain point. Once the quality of the output started to plateau, however–and ideally even before that–I would have been better off telling myself to step away from the “generate” button and get to actual reading. The key question, though, is whether I would have had the discernment to notice this plateau, and the willpower to click away.

Hallucinating Bakhtin made me giggle. I feel like he would welcome the LLM as a retroactive voice in his own textual polyphony. Loved reading about your experiments!

Really appreciate your thoughtful experiment! But your thoughtfulness is exactly what may have made this useful to you and concerns me about student use on the whole. Most students don’t use Chat GPT with this level of metacognition. They just use it to complete assignments more quickly, and perhaps most importantly, copy and paste the ChatGPT answers into assignments like reading responses. This makes grading student comprehension challenging because the ChatGPT language has a high vocab level but basic sentence construction and variation and basic analysis. It’s also challenging because learning actually does need to take effort, and when students blow by the easier stuff in intro to English Lit, they tend to be less prepared when they get to classes that they really can’t ChatGPT. It lowers their resilience level when the learning gets more challenging and requires more critical thinking. Anyway, enough teacher complaints! I look forward to reading the rest of this series.